Dataiku for Tech Experts

Quick experimentation and operationalization for machine learning at scale.

"The most beneficial thing about Dataiku is having everything in one place, so you don’t have to go from one program to another to another and have them work all at the same time. Dataiku takes away that hassle." (Ayca Kandur, Data Scientist, Aviva)

Enjoy Diverse Coding Environments & Git Integration

To build pipelines and ML models, use Dataiku’s visual tools or write custom code in Python, R, Scala, Julia, PySpark, and other languages, developing and editing it in your favorite Integrated Development Environment (IDE).

Git integration delivers project version control and traceability, plus it enables teams to easily incorporate external libraries, notebooks, and repositories for both code development and CI/CD purposes.

Capitalize on Your Existing Infrastructure

Dataiku’s infrastructure-agnostic, modular architecture allows it to run as a SaaS application, on-premises, or in your cloud of choice, integrating with native storage and computational layers for each cloud.

Accelerated instance templates on Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure streamline the administration and management of your Dataiku implementation. Modern pushdown architecture lets you take advantage of elastic, highly scalable computing systems, including SQL databases such as Snowflake, as well as Spark, Kubernetes, and more.

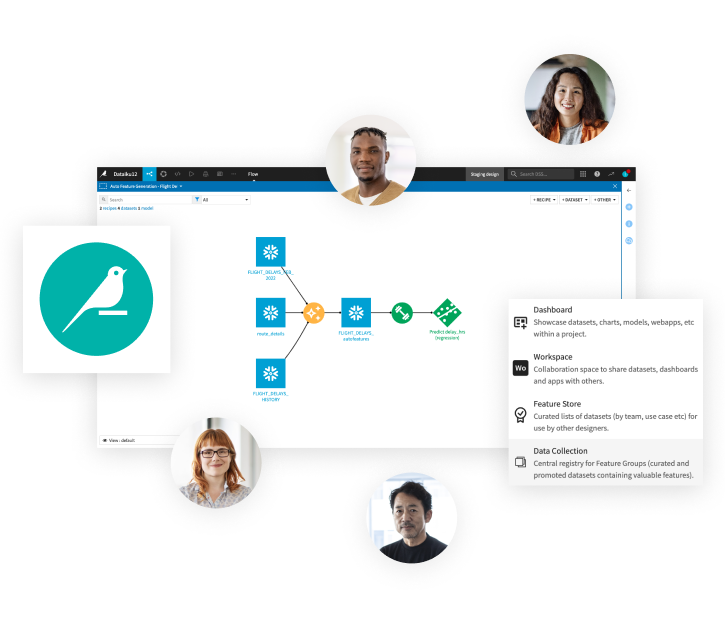

Operationalize Pipelines & Models With Ease

The Flow in Dataiku represents the pipeline of data transformations and movement from start to finish. A timeline of recent activity and project bundles make it easy to track changes and manage project versions in production, and flow-aware tooling helps engineers manage data dependencies and schema consistency in evolving data pipelines.

With Dataiku, automate repetitive tasks like loading and processing data, running batch scoring jobs, allocating compute resources, running data quality checks, and much more.

One Single AI Platform From Design to Delivery

Deploy batch data pipelines or deliver real-time model scoring with API services, including built-in versioning and rollback across all your dev/test/prod environments.

Automatic performance monitoring and model retraining ensure deployed models remain healthy. When a model does degrade, robust drift analysis and model comparisons enable you to make informed decisions about the right model to deploy in production.

Build Models Faster with AutoML

Leverage Dataiku AutoML to quickly create best-in-class models with automated experiment tracking across multiple combinations of algorithms and hyperparameters. Accept the defaults or take full control over all feature handling and training settings, including writing your own custom and deep learning models.

Build and evaluate computer vision, time series forecasting, clustering, and other specialized ML models in a powerful framework that includes interactive tooling for performance reports, model explainability, and what-if analysis.

Discover & Reuse Assets for Maximum Efficiency

Through central hubs like Dataiku’s catalog, feature store, project homepage, and plugin store, teams can easily discover and reuse existing projects and data products to avoid starting from scratch each time.

Coders will appreciate the shared bank of useful code snippets and libraries (including those imported from Git), which expedites scripting tasks and ensures everyone applies a consistent methodology for data manipulation.

Get Started with Dataiku

Start Your Dataiku 14-Day Free Trial

or Install the Free Edition of Dataiku